Algorithm Complexity Analysis

by mervyn

Control Statements

These are statements which potentially alter the execution of instructions

- Conditional statements

- if, switch

- Condition-controlled loops

- for, while, do-while

- Count-controlled loops

- for i from 1 to 10 do … end do; #Maple

- Collection-controlled loops

- foreach(int i in array){ … } //C#

Given

if(condition)

{

//true body

}

else

{

//false body

}

- The runtime of a conditional statement is:

- the run time of the condition (the test), plus

- the run time of the body which is run

- In most cases, the run time of the condition is \(\Theta(1)\) (constant)

- In some cases, it is easy to determine which statement must be run:

- In others, it is less obvious if vs. switch Switch statements appear to be nested if statements:

switch(i){

case 1: /* do stuff */ break;

case 2: /* do other stuff */ break;

case 3: /* do even more stuff */ break;

case 4: /* well, do stuff */ break;

case 5: /* tired yet? */ break;

default: /* do default stuff */

}

Thus, a switch statement would appear to run in \(O(n)\) time where n is the number of cases, the same as nested if Statements

- Why then not use:

if(i==1){/* do stuff */}

else if(i==2)(/* do other stuff */)

else if(i==3)(/* do even more stuff */)

else if(i==4)(/* well, do stuff */)

else if(i==5){/* tired yet? */}

else {/* do default stuff */}

Switch statements were included in the original C language. Why would you introduce something into programming language which is redundant?

- First, you may recall that the cases must be actual values, either: - integers - characters

For example, you cannot have a case with a variable, e.g., - case n: /* do something */ break; //bad

- The compiler looks at the different cases and calculates an appropriate jump

For instance, assume:

- the cases are 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10

- each case requires a maximum of 24 bytes (for example, six instructions)

- Then the compiler simply makes a jump size based on the variable, jumping ahead either 0, 24, 48, 72, …, or 240 instructions

Analysis of Statements

In this case, we do not know

- If we had information about the distribution of the entries of the array, we may be able to determine it

- if the list is sorted (ascending) it will always be run

- if the list is sorted (descending) it will be run once

- if the list is uniformly randomly distributed, then?

- It will be discussed at the end of this topic

Condition-controlled loops

The initialization, condition, and increment usually are single statements running in \(\Theta(1)\)

for(int i=0; i< n; i++)

{

//... code which is $$\Theta(f(n))$$

}

Assuming there are no break or return statements in the loop, the run time is \(\Omega(n)\)

- If the body does not depend on the variable (in this example, i), then the run time of for() is \(\Theta(n f(n))\)

- If the body is \(O(f(n))\), then the run time of the loop is \(O(n f(n))\)

int sum=0; for(int i=0; i<n; i++){ sum +=1; //$$\Theta(1)$$ (constant) }This code has run time: \(\Theta(n\cdot 1)=\Theta(n)\) (linear time)

int sum =0;

for(int i=0; i<n; i++){ //n times

for(int j=0; j<n; j++){

sum +=1; //$$\Theta(1)$$ (constant) times n

}

}

This code has run time: \(\Theta(n\cdot n\cdot 1)=\Theta(n^2)\)

Analysis of Repetition Statements

Suppose with each loop, we use a linear search an array of size m

for(int i=0; i<n; i++){

//search through an array of size m

//$$O(m)$$;

}

In the worst case, the complexity of this search would looking at all m values, but it could end early. Therefore Big O notation rather than \(\Theta\).

- The inner loop is \(O(m)\) and thus the outer loop is \(O(nm)\)

- If the body depends on the variable (in this example, i), then the run time of

for(int i=0; i<n; i++){

//code which is $\Theta(f(i, n))$

}

is \(\Theta \left ( 1+\sum_{i=0}^{n-1}1+f(i, n) \right )\)

-

- If the body is \(O(f(i,n))\), the result is \(O \left ( 1+\sum_{i=0}^{n-1}1+f(i,n) \right )\)

- The code at the inner loop may sometimes be dependent on i, the variable that controls the loop. This is just a loop regarding i, we can represent how many times the operation is running with sigma.

int sum=0;

for(int i=0; i<n; i++){

for(int j=0; j<i; j++){

sum +=i+j;

}

}

- The inner is \(O(1+i(1+1))=\Theta(i)\) hence the outer is \(\Theta \left ( 1+\sum_{i=0}^{n-1}1+i \right )=\Theta \left ( 1+n+\sum_{i=0}^{n-1}i \right )=\Theta \left ( 1+n+\frac{n(n-1)}{2} \right )=\Theta(n^2)\)

int sum=0;

for(int i=0; i<n; i++){

for(int j=0; j<i; j++){

for(int k=0; k<j; k++){

sum +=i + j + k;//const

}

}

}

- From inside to out: \(\Theta(1) \Theta(j) \Theta(i^2) \Theta(n^3)\)

Serial Statements

Consider the following two problems:

- search through a random list of size n to find the maximum entry, and

- search through a random list of size n to find if it contains a particular entry

What is the proper means of describing the run time of these two algorithms?

- Searching for the maximum entry requires thBat each element in the array be examined, thus, it must run in \(\Theta(n)\) time

- Searching for a particular entry may end earlier: for example, the first entry we are searching for may be the one we are looking for, thus, it runs in \(O(n)\) time (worst case scenario: n times) Therefore,

- If the leading term is \(\Theta\), then the result must be \(\Theta\), otherwise

- Of the leading term is big-O, we can say the result is big-O: for example,

- \[O(n)+O(n^2)+O(n^4)=O(n+n^2+n^4)=O(n^4)\]

- \[O(n)+\Theta(n^2)=\Theta(n^2)\]

- \[O(n^2)+\Theta(n)=O(n^2)\]

- \[O(n^2)+\Theta(n^2)=\Theta(n^2)\]

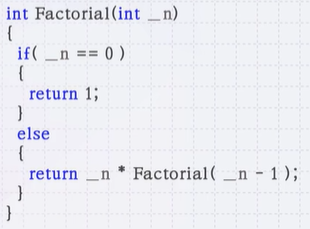

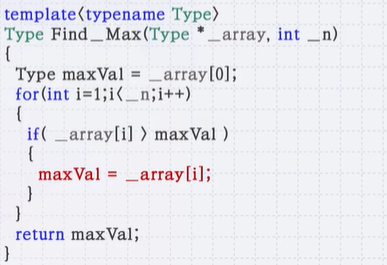

Functions

A function (or subroutine) is code which has been separated out, either to:

- repeated operations

- e.g., mathematical functions

- group related tasks

- e.g., initialization

Because a subroutine (function) can be called from anywhere, we must:

- prepare the appropriate environment

- deal with arguments (parameters)

- jump to the subroutine

- execute the subroutine

- deal with the return value

- clean up

Fortunately, this is such a common task that all modern processors have instructions that perform most of these steps in one instruction.

- Thus, we will assume that the overhead required to make a function call and to return is \(\Theta(1)\)

- Because any function requires the overhead of a function call and return, we will always assume that \(T_{f}=\Omega (1)\)

- That is, it is impossible for any function call to have a zero run time

- Thus, given a function \(f(n)\) (the run time of which depends on n) we will associate the run time of \(f(n)\) by some function \(T_{f}(n)\)

- We may write this to \(T(n)\)

- Because the run time of any function is at least \(O(1)\), we will include the time required to both call and return from the function in the run time

source “K-MOOC 허재필 교수님의 <인공지능을 위한 알고리즘과 자료구조: 이론, 코딩, 그리고 컴퓨팅 사고> 강좌의 6-2 알고리즘의 복잡도 분석 중(http://www.kmooc.kr/courses/course-v1:SKKUk+SKKU_46+2020_T1)”

tags:

Comments

Post comment